NGINX (pronounced “Engine-X”) is an open-source, lightweight, high-performance web server and proxy server. Nginx is used as a reverse proxy server for the HTTP, HTTPS, SMTP, IMAP, and POP3 protocols; it is also used for load balancing and HTTP caching.

Nginx accelerates content and application delivery, improves security, and facilitates availability and scalability for the busiest websites on the Internet.

In layman’s terms, Nginx is software used as a web server to handle large numbers of concurrent requests. Previously, Apache was the usual choice for this job, but as the web grew and sites had to serve more requests at the same time, concurrency became a real concern. Apache fell behind in handling such loads, and Nginx was created to address that problem. This website also uses Nginx as its web server, and it has let me improve performance many times over.

Where Did Nginx Get Its Name?

Here are the words of Igor Sysoev:

“In spring 2001 I had written Apache mod_accel that is enhanced replacement of mod_proxy. But it was clear that Apache has low scalability. I had read the c10k problem, had investigated existent servers (thttpd, boa, etc.) and had decided that I need something like these servers, but with SSI, proxy, and cache support. Also it should has flexible configuration like Apache and supports online upgrade and quick log rotation.”

As an aside, I’ve always been a bit curious about the name.

“The base for name was NG letters those sounds like en-gee in English (at least I think so :). X is simply fine letter. But ngx was already used many times. There were some variants – ngnx, nginx and nginex. Nginx seemed the better for me and I had look it in Google and found the only quote symbol.”

Why Is Apache Slow? How Did Nginx Take Over?

Apache was introduced in 1995, when web servers were not expected to handle thousands of simultaneous connections. Later, as that need arose, MPMs (Multi-Processing Modules) were added to Apache to address it. But with the multi-processing modules, memory consumption ramped up and hogged more and more resources; meanwhile, giant sites started receiving millions of hits every day.

So either a new web server or a change in Apache was needed to fix these issues.

This issue became known as the C10K problem: handling 10,000 concurrent connections.

Igor Sysoev then started developing Nginx in 2002 to address this problem, and Nginx was first publicly released in 2004. Nginx is lightweight and can handle large numbers of concurrent requests without hogging too many server resources.

It finally solved the problem of C10K.

But Nginx is not as dynamic as Apache, since Nginx came with an entirely new structure and configuration. There is no per-directory .htaccess file; settings live in *.conf files, and changes take effect only after a reload or restart.


As of 2014, Nginx hosted nearly 12% (over 22 million) of active sites across all domains.

How Does Nginx Work?

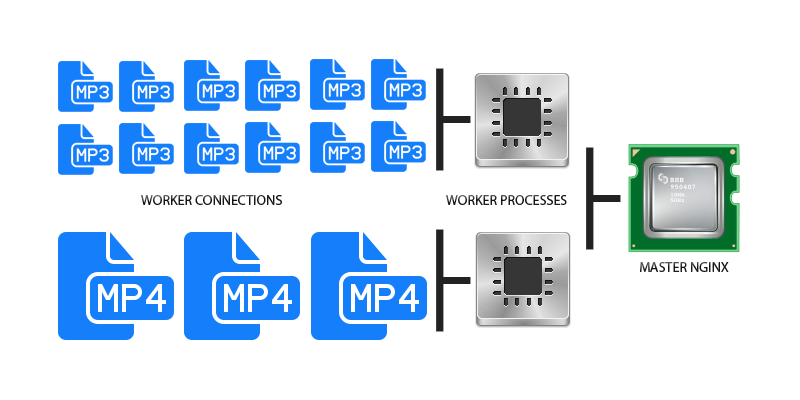

Nginx follows an event-driven model; it does not create a separate process or thread for each request as Apache does, but handles many requests inside each process by reacting to events as they occur. The diagram above shows an Nginx server handling concurrent MP3 and MP4 file requests.

Nginx divides its work between worker processes and worker connections. Worker connections carry the requests users make and the responses they receive from the web server; each connection belongs to a parent worker process, which handles its requests.

A single worker process (see Worker Connections in the diagram) can handle around 1024 connections at a time. This limit is set by the worker_connections directive and can be increased.

There can be any number of worker processes, depending on the type of server you have (typically one per CPU core), and each worker process handles its own set of connections, so more workers can serve more concurrent requests.
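To make the arithmetic concrete, here is a rough sketch of how the two settings combine into an upper bound on simultaneous connections. The function name and the halving rule for reverse proxying are my own assumptions for illustration (a proxied client also needs a connection to the upstream server), not an official Nginx formula, and real limits also depend on things like open-file limits.

```python
def estimate_max_connections(worker_processes, worker_connections, reverse_proxy=False):
    """Rough upper bound on simultaneous client connections.

    Hypothetical helper for illustration only; not part of Nginx.
    """
    if worker_processes < 1 or worker_connections < 1:
        raise ValueError("both settings must be positive integers")

    # Every worker process can hold up to worker_connections connections.
    total = worker_processes * worker_connections

    # Assumption: when proxying, each client also uses one upstream
    # connection, so only about half the slots are left for clients.
    return total // 2 if reverse_proxy else total


if __name__ == "__main__":
    print(estimate_max_connections(4, 1024))
    print(estimate_max_connections(4, 1024, reverse_proxy=True))
```

With 4 workers and 1024 connections each, the estimate is 4096 connections when serving files directly, and roughly half of that when every request is proxied to a backend.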

Finally, the worker processes are managed by the Nginx master process. The master process does not serve requests itself: it reads the configuration and starts and supervises the worker processes, which do the actual request handling. You can also control whether a worker accepts one new connection at a time or as many as are waiting (the multi_accept directive), which is a setting worth experimenting with.


Nginx is asynchronous: requests are processed concurrently without blocking one another, like water flowing through a shared pipe. As a result, a worker's resources are shared among all its connections rather than dedicated to, and blocked by, a single one. That is why Nginx can do the same work with less memory and use that memory efficiently.
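The idea is easier to see in a toy example. The sketch below is not how Nginx is implemented (Nginx is written in C and uses mechanisms such as epoll or kqueue); it only illustrates the general event-loop pattern with Python's standard selectors module. A single thread watches several connections and answers whichever one is ready, instead of dedicating a thread to each. The names serve_once and demo are made up for this example.

```python
import selectors
import socket


def serve_once(server_sides):
    """Answer one message per connection from a single thread."""
    sel = selectors.DefaultSelector()
    for conn in server_sides:
        conn.setblocking(False)  # never wait on one connection
        sel.register(conn, selectors.EVENT_READ)

    handled = 0
    while handled < len(server_sides):
        events = sel.select(timeout=1.0)
        if not events:
            break  # nothing became ready; stop instead of waiting forever
        for key, _ in events:
            conn = key.fileobj
            data = conn.recv(1024)
            conn.sendall(data.upper())  # small reply, fits the socket buffer
            sel.unregister(conn)
            handled += 1
    sel.close()
    return handled


def demo():
    # Three connected socket pairs stand in for three clients.
    pairs = [socket.socketpair() for _ in range(3)]
    clients = [client for client, _ in pairs]
    servers = [server for _, server in pairs]

    for i, client in enumerate(clients):
        client.sendall(f"request {i}".encode())

    handled = serve_once(servers)
    replies = [client.recv(1024).decode() for client in clients]

    for sock in clients + servers:
        sock.close()
    return handled, replies


if __name__ == "__main__":
    print(demo())
```

All three requests are served by one loop in one thread. A thread-per-request design would need three threads for the same work, which is where the memory savings described above come from.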
